Building an IaC Security Scanner in Go

As a security engineer who’s implemented security controls across cloud environments, I’ve always been curious about how policy engines actually work under the hood. Sure, I could use existing tools like Checkov or tfsec, but there’s something to be said for understanding the fundamentals of how infrastructure security scanning really operates.

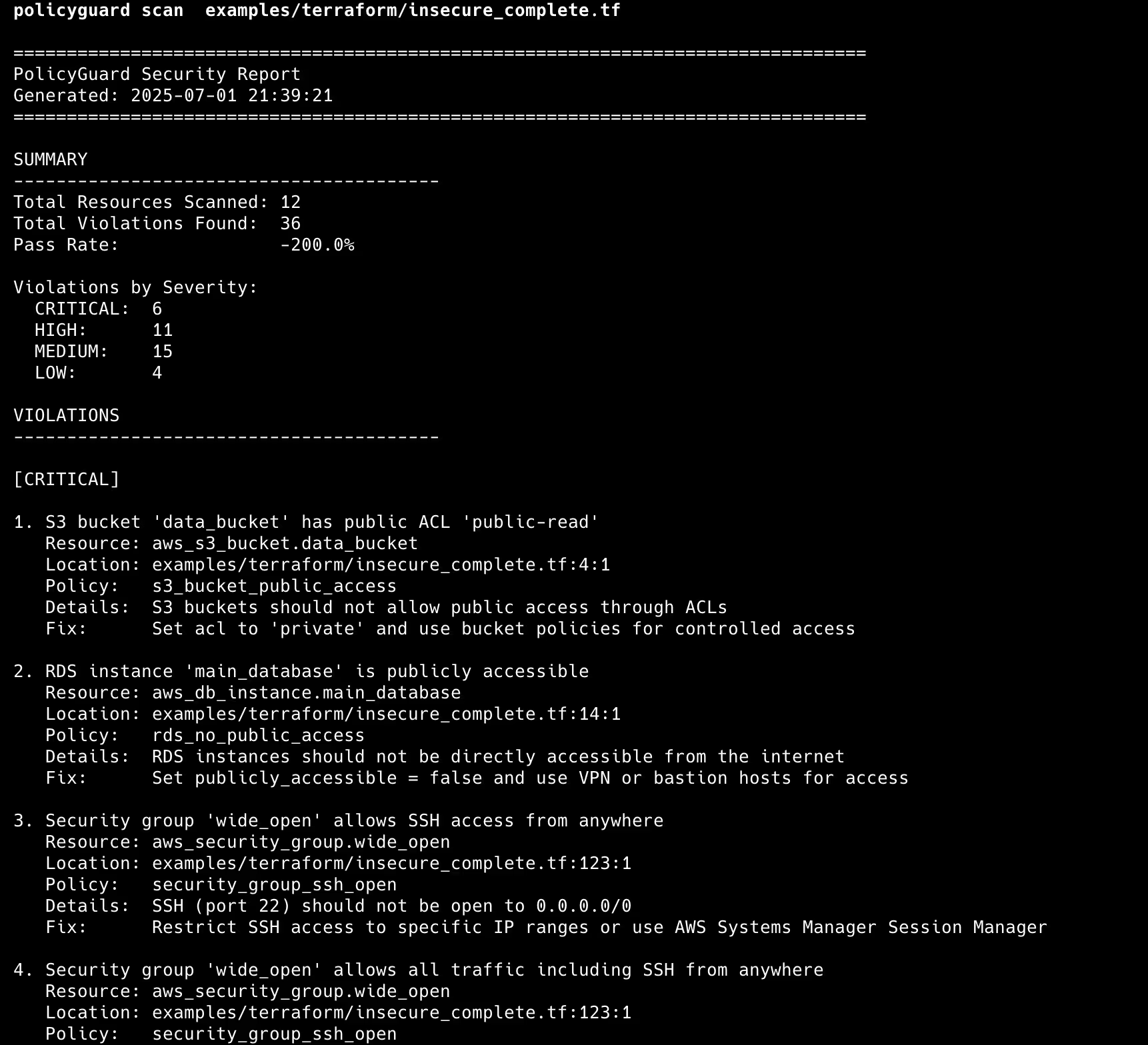

That curiosity led me down a rabbit hole (yes, a rabbit hole) that consumed weekends and resulted in PolicyGuard, an IaC security scanner built from scratch in Go. What started as a “let me just understand how this works” project turned into something that’s now catching security issues in infrastructure code.

The Problem That Started It

Working in cloud security, you see the same mistakes constantly. Last quarter alone, we caught 47 S3 buckets with public read-write access. Developers keep launching EC2 instances without encryption. One team had a security group allowing 0.0.0.0/0 on port 22 for three months.

The tools exist to catch this stuff, but I wanted to understand how they actually work. How does Checkov parse a 2000-line Terraform file in seconds? What magic lets tfsec understand that aws_s3_bucket_public_access_block relates to aws_s3_bucket?

The existing tools frustrated me. Checkov is great at parsing but adding custom policies feels like wrestling with Python decorators. Terrascan has a powerful policy engine but chokes on our modularized Terraform setup. I figured I could learn more by building something from scratch than complaining about existing tools.

Why Go Made Sense

Coming from a background heavy in Python for enterprise tools, Go wasn’t my first instinct. But for a security tool that needs to parse configuration files, evaluate policies, and potentially run in CI/CD pipelines, Go’s characteristics became compelling.

The concurrency model meant I could parse multiple Terraform files simultaneously without the complexity of managing thread pools. I would probably have a separate write-up detailing my experience here. The static typing caught errors at compile time that would have been runtime surprises in Python. Most importantly, the single binary deployment meant no dependency hell when installing the tool in different environments.

The learning curve was steeper than expected, though. Go’s interface system felt foreign coming from object-orientated languages. I spent more time than I’d like to admit getting comfortable with how interfaces work for dependency injection and testing.

Parsing Infrastructure as Code? Harder Than It Looks

The first major challenge was parsing Terraform files. HCL (HashiCorp Configuration Language) looks simple on the surface, but the reality is more complex. There are subtle differences between .tf files and .tf.json files. Variable interpolations can be deeply nested. Data sources reference other resources in ways that aren’t immediately obvious.

I initially tried to build a simple parser that would extract resource blocks and their attributes. That worked for basic cases but fell apart quickly when encountering real-world Terraform code with modules, complex variable references, and conditional resource creation.

Using HashiCorp’s own HCL parsing library made my workload much easier. Instead of reinventing the wheel, I could leverage the same parsing logic that Terraform itself uses. This handled all the edge cases I was encountering.

But then came OpenTofu support. When the Terraform fork emerged, I realized the tool should support both ecosystems. Fortunately, since OpenTofu maintains compatibility with HCL syntax, adding support was mostly seamless.

The Policy Engine Challenge

For policy evaluation, I chose OPA (Open Policy Agent) and its Rego language. This decision came from seeing OPA’s adoption in Kubernetes environments and wanting to understand how declarative policy languages work in practice.

Rego has a learning curve that’s definitely non-trivial. Coming from imperative programming languages, thinking in terms of rules and constraints rather than step-by-step instructions required a mental shift. The debugging experience is also quite different from traditional programming.

Writing security policies in Rego meant really understanding the structure of Terraform resources. For S3 bucket policies, I had to account for different ways encryption can be configured, various ACL settings, and the interplay between bucket policies and public access blocks. Each AWS service has its own quirks and security considerations that need to be encoded into policy rules.

The interesting part was discovering how to make policies extensible. Users should be able to drop in new .rego files without recompiling the entire tool. This required designing the policy loading system to dynamically discover and compile policy files at runtime.

Testing Infrastructure Code

Testing a security scanner presented unique challenges. Unit tests were straightforward for individual components, but integration testing required actual Terraform files with known security issues. I had to create a test section with deliberately insecure infrastructure configurations.

The coverage metrics were initially disappointing. Testing policy evaluation meant creating extensive test cases for different resource configurations and ensuring that violations were detected correctly. I learned that achieving meaningful test coverage in security tooling requires thinking beyond code coverage to scenario coverage.

One particular headache was testing the pass rate calculation. My first version gave me a -127% pass rate. Turns out I was counting total violations instead of unique resources. One S3 bucket with five issues counted as five failures. Took me an embarrassing amount of time staring at the math before I realized what was happening.

CI/CD Integration

Building the tool was one thing, but making it useful in actual development workflows was another challenge entirely. Modern development teams expect tools that integrate seamlessly with their existing CI/CD pipelines.

Supporting multiple output formats became a priority implementation. Security teams want SARIF format for GitHub Security tab integration. QA teams prefer JUnit XML for test reporting dashboards. Developers want human-readable output they can act on immediately. Each format has different requirements and expectations for how violations should be represented.

The GitHub Actions integration revealed issues I hadn’t anticipated during local development. Path handling works differently in different operating systems. Environment variable handling has subtle differences between local shells and CI environments. Windows support required additional testing since most of my development was on macOS.

Getting the automated release pipeline working was its own journey. I wanted the tool to be easily installable via “go install” which meant publishing to GitHub Packages and ensuring proper semantic versioning. The number of edge cases in release automation workflows was humbling.

Considerations on Performance

As the tool matured, performance became a consideration. Parsing large Terraform configurations can be memory-intensive, especially when dealing with generated files from tools like Terragrunt. Policy evaluation scales with the number of resources and the complexity of policies.

I implemented concurrent processing for parsing multiple files but had to be careful about resource contention when multiple goroutines were evaluating policies simultaneously. The OPA engine has its own performance characteristics that needed to be understood and worked around.

Caching became important for developer workflows where the same files are scanned repeatedly during development cycles. But cache invalidation is tricky when policies can be updated independently of the infrastructure code being scanned.

Human Side of Security Tooling

Here’s what nobody tells you about building security tools. The code is the easy part. The hard part is making developers actually want to use it.

Early feedback was brutal. “Your tool says my S3 bucket is insecure but doesn’t tell me how to fix it.” Fair point. So I added remediation suggestions. But then: “The fix broke our CI/CD pipeline because we actually need public access for static assets.” Another fair point.

Turns out security tools need to understand context. That “insecure” public S3 bucket might be serving your company’s logo. The wide-open security group could be for a honeypot. Generic security rules without context create more problems than they solve.

The error messages went through dozens of iterations. First version dumped full stack traces. Developers hated it. Too verbose version explained AWS security models in detail. Also hated - “just tell me what to change.” Final version shows the exact line to modify with the secure configuration. Much better.

Lessons Learned and What’s Next

Building PolicyGuard made me understand several insights about security tooling and Go development. The importance of good abstractions became clear when adding support for different IaC formats and output types. Interfaces in Go really does shine when you need to support multiple implementations of similar functionality.

The value of testing in security tools cannot be overstated. False positives undermine trust in automated security scanning. False negatives defeat the entire purpose. Getting the balance right requires extensive testing with real-world configurations.

Looking ahead, the Kubernetes support is next on the roadmap. Rather than building another custom parser, I’m exploring integration with existing Kubernetes MCP servers that already understand cluster configurations and security best practices. This approach could provide better coverage while reducing the development effort.

The Azure and GCP support will likely follow similar patterns to the AWS implementation, but each cloud provider has unique services and security models that will require consideration.

Building a security scanner from scratch was more educational than any course or tutorial could have been. Understanding how HCL parsing works, how policy engines evaluate rules, and how security violations should be presented to developers provided insights that are immediately applicable to other security tooling projects.

The Go ecosystem proved to be good for this type of system tool. The standard library handled most of the heavy lifting, and the third-party libraries filled gaps without creating dependency issues.

For anyone considering similar projects, my advice would be to start with the parsing and policy evaluation core, get that solid with proper testing, and then build the user experience and integrations around it. Security tooling is only as good as its adoption, and adoption depends heavily on developer experience.

The source code for PolicyGuard is available on GitHub for anyone interested in diving deeper into the implementation details, and I hope others can build on this foundation to make cloud security more accessible and effective.