Containers Changed Everything and I Wish I'd Started Sooner

I still remember the deployment that made me rethink everything. It was 2020, and I was trying to deploy a Python web app that worked perfectly on my MacBook. The production server was running Ubuntu 16.04 with a slightly different Python version. Different library versions. Different everything.

The deployment failed spectacularly. Three hours of troubleshooting revealed that a small difference in how the systems handled SSL certificates was breaking everything. “But it works on my machine” became my least favorite phrase that day.

That’s when containers clicked for me. Not as some abstract concept, but as the solution to a very real problem I’d just spent half my Saturday debugging.

The Problem That Drove Me Crazy

Picture a scenario that’ll sound familiar. You’re deploying a web application. Your laptop runs the latest macOS. Staging uses Ubuntu 18.04. Production? Some crusty CentOS 7 box that hasn’t been updated since 2019.

Each environment has different Python versions. Different SSL libraries. Different everything.

I’ve lost count of deployments that worked in staging but exploded in production. Usually at the worst possible moment. Like during a demo to the CEO. Or right before a critical customer launch.

Containers solved this nightmare. They package your application with everything it needs to run, creating a bubble of consistency that travels from your laptop to production unchanged.
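
If you want to see this kind of drift for yourself, a small script can print the details that tend to differ between machines. This is only a sketch using Python’s standard library; the function name `environment_fingerprint` is my own invention, and the four fields are the ones that bit me, not an exhaustive list.

```python
import platform
import ssl


def environment_fingerprint() -> dict:
    """Collect a few details that commonly differ between machines."""
    return {
        "os": platform.system(),              # e.g. "Darwin" or "Linux"
        "os_release": platform.release(),     # OS / kernel release string
        "python": platform.python_version(),  # "major.minor.patch"
        "openssl": ssl.OPENSSL_VERSION,       # OpenSSL library this interpreter uses
    }


if __name__ == "__main__":
    for key, value in environment_fingerprint().items():
        print(f"{key}: {value}")
```

Run it on your laptop, then on staging and production, and compare the output. Any line that differs is a candidate for the next “works on my machine” incident.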

What Actually Is a Container?

Here’s the analogy that made it click for me. You know those shipping containers that carry stuff across oceans? Same concept, but for software.

A container bundles your application with everything it needs. Code, runtime, libraries, system dependencies - everything. Your app gets its own private universe where all the pieces fit together perfectly.

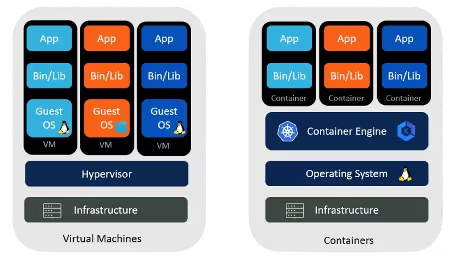

But here’s the clever bit - containers aren’t like virtual machines that need their own operating system. They share the host OS kernel while keeping applications isolated. It’s like having roommates who share the kitchen but have their own locked bedrooms.

This means containers start in seconds instead of minutes. They use megabytes instead of gigabytes. And you can run dozens on the same server without breaking a sweat.

The Three Things That Make Containers Work

Containers have three main pieces that work together. Nothing fancy, just solid engineering.

Container Engine: This is the workhorse. Docker is the famous one, but there are others. The engine builds containers, runs them, and keeps them from stepping on each other. It’s like a really good apartment manager - everyone gets their own space but shares the building’s utilities.

Container Images: These are your blueprints. An image captures everything your app needs in a single package. The cool part? An image never changes once it’s built. The same image that ran on your laptop is the one that runs in production, so your app sees the same runtime and libraries in both places. No more “but it worked on my machine” surprises.

Container Registry: Think GitHub but for container images. Docker Hub is the biggest one. Need a database? Someone’s already built a container image for that. Need to share your custom app setup? Push it to a registry and your team can pull it down instantly.

That’s it. Build an image, store it in a registry, run it with an engine. Simple enough that you can explain it to your manager without losing them in technical jargon.
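
To make the build, store, run cycle concrete, here’s a toy model in Python. It is not Docker’s API or how any real engine is implemented; `Image`, `Registry`, `build`, and `run` are hypothetical names I made up for illustration. The one idea it borrows from real tooling is identifying an image by a hash of its contents, which is why the same image gives the same result everywhere.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class Image:
    """An immutable bundle of files, identified by a digest of its contents."""
    name: str
    files: tuple  # sorted (path, content) pairs

    @property
    def digest(self) -> str:
        h = hashlib.sha256()
        for path, content in self.files:
            h.update(path.encode() + b"\0" + content.encode() + b"\0")
        return h.hexdigest()


def build(name: str, files: dict) -> Image:
    """Engine, step 1: freeze a set of files into an image."""
    return Image(name, tuple(sorted(files.items())))


class Registry:
    """Stores images by name so other machines can pull them."""

    def __init__(self):
        self._images = {}

    def push(self, image: Image) -> None:
        self._images[image.name] = image

    def pull(self, name: str) -> Image:
        return self._images[name]


def run(image: Image) -> str:
    """Engine, step 2: 'start' a container (here we only report what would run)."""
    return f"running {image.name}@{image.digest[:12]}"


if __name__ == "__main__":
    registry = Registry()
    image = build("myapp:1.0", {"app.py": "print('hi')", "requirements.txt": "flask"})
    registry.push(image)                 # laptop pushes
    pulled = registry.pull("myapp:1.0")  # production pulls
    print(run(pulled))
    print("same bits as the laptop build:", pulled.digest == image.digest)
```

The point of the digest is that two machines can check they hold the exact same image without trusting the name alone.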

Containers vs. Virtual Machines: Settling the Debate

You might be wondering, “Don’t virtual machines already do this?” It’s a fair question, and understanding the difference is crucial.

Virtual machines are like building entire houses for each application. Each VM includes a full operating system, virtual hardware, and then your application on top. It’s comprehensive but heavy. Starting a VM can take minutes, and each one might consume gigabytes of memory just for the operating system.

Containers, on the other hand, are more like efficient apartments in a well-designed building. They share the building’s infrastructure (the host OS kernel) but maintain their own private space. A container can start in seconds and might only need megabytes of memory beyond what the application itself requires.

This efficiency isn’t just about saving resources; it’s about agility. When you can spin up a new instance of your application in seconds rather than minutes, it changes how you think about scaling, testing, and deployment. Suddenly, running 100 copies of your application for a load test becomes practical, not prohibitive.

The Real-World Impact: Why Containers Matter

The true power of containers becomes clear when you see them in action. Take Netflix, for example. They run hundreds of microservices, each potentially requiring different versions of various libraries and tools. Without containers, managing this complexity would be a nightmare. With containers, each service lives in its own optimized environment, and deploying updates becomes as simple as swapping out container images.

Or consider a startup I worked with that was struggling with deployment consistency. Their application worked perfectly in development but crashed mysteriously in production. The culprit? A different version of a system library. After containerizing their application, these problems vanished. The container ensured that the same environment went from the developer’s laptop through testing and into production.

Containers also enable new architectural patterns. Microservices architecture, where applications are broken into small, independent services, becomes practical when each service can be containerized. You can update one service without affecting others, scale specific components based on demand, and even use different programming languages for various services, all because containers provide a consistent, isolated environment.

The Container Ecosystem: More Than Just Docker

While Docker popularized containers and remains the dominant player, the ecosystem has grown rich and diverse. Docker made containers accessible, wrapping complex Linux kernel features in a user-friendly package. Its success spawned an entire industry.

But Docker isn’t alone. CoreOS introduced rkt (pronounced “rocket”) as an alternative focused on security and standards compliance, though the project has since been discontinued. Linux Containers (LXC) predates Docker and offers lower-level control for those who need it. Podman emerged as a “daemonless” alternative, addressing some of Docker’s architectural concerns.

Each technology has its strengths. Docker excels at developer experience and has the largest ecosystem. rkt prioritized security and composability. LXC offers fine-grained control. The diversity ensures that whatever your specific needs, there’s a container solution that fits.

What’s remarkable is how these technologies have standardized around common formats and APIs. The Open Container Initiative (OCI) publishes shared image and runtime specifications, so a container image built with one tool can generally run with another. This standardization prevents vendor lock-in and encourages innovation.

Containers in Practice: Transforming Development Workflows

The real magic happens when containers become part of your daily workflow. In continuous integration and continuous deployment (CI/CD) pipelines, containers ensure that the same environment used for development is used for testing and deployment.

Development teams can share complete environments as easily as sharing code. New team members can get a fully configured development environment running with a single command. No more spending days setting up tools and dependencies: pull the container image and start coding.

Testing becomes more reliable too. Each test run starts with a fresh container, ensuring that previous tests don’t contaminate results. You can run tests in parallel without conflicts, dramatically speeding up the feedback loop. Integration testing becomes simpler when you can spin up an entire stack of services in containers, creating a miniature version of your production environment on demand.
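
Here’s a minimal sketch of the “fresh container per test run” idea, assuming the Docker CLI is installed and that your tests live in an image. The image name `myapp-tests:latest` and the helper names are placeholders of mine. The only Docker feature it relies on is `docker run --rm`, which removes the container when the command exits.

```python
import subprocess


def fresh_container_command(image: str, test_command: list) -> list:
    """Build a `docker run` invocation that starts from a clean container.

    Every `docker run` creates a new container from the image, and --rm
    deletes it on exit, so files written during one run are gone by the next
    (unless you mount a volume, which this sketch does not do).
    """
    return ["docker", "run", "--rm", image, *test_command]


def run_tests(image: str, test_command: list) -> int:
    """Run the tests in a throwaway container and return the exit code."""
    completed = subprocess.run(fresh_container_command(image, test_command))
    return completed.returncode


# Example call (needs Docker and an image with your tests baked in):
#   exit_code = run_tests("myapp-tests:latest", ["pytest", "-q"])
```

Because each invocation is independent, a CI system can launch several of these side by side without the runs sharing any filesystem state.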

For deployment, containers enable sophisticated strategies that were previously too complex for most teams. Blue-green deployments, where you run two versions of your application side by side before switching traffic, become straightforward. Canary releases, where new versions are gradually rolled out to subsets of users, are just a matter of adjusting which containers receive traffic.
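
The routing decision behind a canary release can be sketched in a few lines. This is one simple approach, not what any particular load balancer does: hash the user ID into a bucket from 0 to 99 and send the lowest buckets to the new version. The function name `route` and the labels `"stable"` and `"canary"` are assumptions for the example.

```python
import hashlib


def route(user_id: str, canary_percent: int) -> str:
    """Return "canary" for roughly canary_percent% of users, else "stable".

    Hashing the user ID (rather than picking at random per request) keeps
    each user on the same version from one request to the next.
    """
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # 0..99
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for percent in (0, 5, 50, 100):
        hits = sum(route(u, percent) == "canary" for u in users)
        print(f"{percent:>3}% target: {hits} of {len(users)} users on canary")
```

Raising `canary_percent` step by step is the gradual rollout. Flipping it from 0 to 100 in one move is, in effect, the blue-green switch.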

The Path Forward: Preparing for Kubernetes

As powerful as containers are, managing them at scale presents new challenges. When you have dozens or hundreds of containers across multiple servers, you need orchestration. How do you ensure containers are distributed efficiently across your infrastructure? How do you handle failures when a server goes down? How do you manage updates without downtime?
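
To get a feel for what those questions involve, here’s a deliberately naive placement sketch. It is not how Kubernetes or any real orchestrator schedules work; the function names, node names, and memory figures are all made up. It places the largest containers first on whichever node has the most free memory, and “handles” a failed server by simply placing everything again on the survivors.

```python
def place(containers: dict, nodes: dict) -> dict:
    """Greedy placement: largest container first, onto the emptiest node.

    containers maps name -> memory needed; nodes maps name -> memory available.
    Returns a mapping of container name -> node name.
    """
    free = dict(nodes)  # copy so the caller's dict is untouched
    placement = {}
    for name, need in sorted(containers.items(), key=lambda kv: -kv[1]):
        node = max(free, key=free.get)  # node with the most free memory
        if free[node] < need:
            raise RuntimeError(f"no node has room for {name}")
        free[node] -= need
        placement[name] = node
    return placement


def reschedule_after_failure(containers: dict, nodes: dict, failed: str) -> dict:
    """Drop the failed node and place every container again on the rest."""
    survivors = {n: cap for n, cap in nodes.items() if n != failed}
    return place(containers, survivors)


if __name__ == "__main__":
    nodes = {"node-1": 4, "node-2": 4, "node-3": 4}  # GB of memory, hypothetical
    containers = {"web": 2, "db": 3, "cache": 1}
    print(place(containers, nodes))
    print(reschedule_after_failure(containers, nodes, "node-2"))
```

Even this toy version has to decide what to do when nothing fits, and it ignores networking, storage, and zero-downtime updates entirely. That gap is the job an orchestrator takes on.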

This is where Kubernetes comes into play. If containers are like shipping containers, Kubernetes is the port management system that ensures they get where they need to go. It handles the complex logistics of running containers at scale, from scheduling and scaling to networking and storage.

But that’s a story for our next article. For now, understanding containers gives you the foundation to appreciate why orchestration becomes necessary and how Kubernetes builds upon the container revolution.

Your Container Journey Starts Here

Containers represent a fundamental shift in how we think about software deployment. They’re not just a technical improvement; they’re an enabler of new ways of working, new architectures, and new possibilities.

The beauty of containers is that you can start small. Install Docker on your laptop and containerize a simple application. Experience firsthand how liberating it is to package an application once and know it will run anywhere. Feel the satisfaction of eliminating environment-specific bugs. Watch your deployment process become simpler and more reliable.

From there, the path leads naturally to more advanced topics. How do you optimize container images for size and security? How do you manage secrets and configuration? How do you monitor containerized applications? Each question leads to new learning and new capabilities.

What’s Next?

In our upcoming article, we’ll explore Kubernetes, the technology that takes containers from useful to transformative at scale. We’ll see how it solves the orchestration challenge and enables patterns like self-healing applications and automatic scaling.

But for now, take a moment to appreciate how far we’ve come. From the dark days of “works on my machine” to a world where applications can move seamlessly across any environment, containers have delivered on technology’s promise to make our lives simpler while enabling us to build more ambitious systems.