Inspector to SSM Vulnerability Patching Automation

AWS Inspector finds vulnerabilities but doesn’t patch them automatically. The obvious solution seems simple: wire Inspector → EventBridge → Systems Manager together and let automation handle the rest.

Except it breaks spectacularly in production.

A recent blog post demonstrated this pattern with a critical caveat buried in the middle: “full auto-patching didn’t complete due to overlapping runs + reboots when multiple findings existed.” That’s code for “it doesn’t work when you actually use it.” Which honestly surprised me at first.

Here’s what actually happens. Inspector scans an EC2 instance and finds 15 vulnerabilities. It fires 15 separate EventBridge events. Your automation tries to patch the same instance 15 times simultaneously.

Chaos.

The first patch operation starts, reboots the instance, and the other 14 operations fail. You get partial patches, broken services, and instances stuck in weird states.

The Race Condition Problem

Most examples ignore concurrency entirely. They assume one vulnerability per instance or perfect timing - fantasy scenarios that don’t exist in enterprise environments. (I still don’t understand why the basic examples skip this entirely.)

The solution requires deduplication with state management. DynamoDB provides this through conditional writes:

table.put_item(

Item=item,

ConditionExpression='attribute_not_exists(instance_id) OR #status <> :in_progress'

)First vulnerability wins the lock. Others get queued. No more simultaneous patch operations destroying your infrastructure.

What Production Actually Requires

The blog post approach patches immediately, regardless of business hours or system health. That’s like performing surgery without checking if the patient can survive it. Not great.

Pre-Patch Safety Validation

Production systems need safety nets before any patches get applied:

def validate_instance_health(instance_id):

"""Pre-patch validation implemented in Python via SSM Run Command"""

# Execute health checks via SSM

command_id = ssm.send_command(

InstanceIds=[instance_id],

DocumentName="AWS-RunShellScript",

Parameters={

'commands': [

# Disk space validation

'DISK_USAGE=$(df -h / | awk \'NR==2 {print $5}\' | sed \'s/%//\')',

'if [ $DISK_USAGE -gt 85 ]; then echo "ERROR: Insufficient disk space ($DISK_USAGE%)"; exit 1; fi',

# Memory validation

'FREE_MEM=$(free -m | awk \'NR==2{printf "%.1f", $7/$2*100}\')',

'if (( $(echo "$FREE_MEM < 10.0" | bc -l) )); then echo "ERROR: Low memory"; exit 1; fi',

# Critical services check

'for service in sshd systemd-networkd; do',

' if ! systemctl is-active --quiet $service; then',

' echo "ERROR: Critical service $service not running"; exit 1',

' fi',

'done'

]

}

)

return wait_for_command_completion(command_id['Command']['CommandId'])This Python-based validation runs via SSM Run Command, preventing patches on systems with insufficient resources or failed critical services. (Learned this one the hard way.)

Automated Backup Strategy

EBS snapshots before every patch operation provide a recovery path when things go wrong:

def create_snapshots(instance_id):

"""Create EBS snapshots before patching with 30-day TTL"""

try:

volumes = ec2.describe_volumes(

Filters=[{'Name': 'attachment.instance-id', 'Values': [instance_id]}]

)['Volumes']

snapshot_ids = []

for volume in volumes:

response = ec2.create_snapshot(

VolumeId=volume['VolumeId'],

Description=f'Pre-patch snapshot for {instance_id} - {volume["Attachments"][0]["Device"]}',

TagSpecifications=[{

'ResourceType': 'snapshot',

'Tags': [

{'Key': 'AutoDelete', 'Value': 'true'},

{'Key': 'Purpose', 'Value': 'PrePatchBackup'},

{'Key': 'InstanceId', 'Value': instance_id},

{'Key': 'DeleteAfter', 'Value': (datetime.now() + timedelta(days=30)).isoformat()}

]

}]

)

snapshot_ids.append(response['SnapshotId'])

return snapshot_ids

except Exception as e:

logger.error(f"Failed to create snapshots for {instance_id}: {str(e)}")

raiseSnapshots take seconds to create but can save hours of recovery time. Auto-delete tags ensure they clean themselves up after 30 days. Simple but effective.

Maintenance Window Awareness

The original approach patches whenever Inspector finds something. Fine for development, terrible for production workloads serving real users. Obviously.

Production systems have two scheduling options:

- AWS SSM Maintenance Windows: Native scheduling with powerful controls (concurrency, error thresholds, cross-account execution)

- Custom Lambda scheduler: Better for complex business logic, custom windows, or multi-account orchestration patterns

We chose the Lambda approach for flexibility, but SSM Maintenance Windows work great for simpler cases.

def is_in_maintenance_window():

"""Check if current time is within maintenance window (2-6 AM UTC)"""

current_hour = datetime.now(timezone.utc).hour

MAINTENANCE_START = 2 # 2 AM UTC

MAINTENANCE_END = 6 # 6 AM UTC

if MAINTENANCE_START > MAINTENANCE_END:

return current_hour >= MAINTENANCE_START or current_hour < MAINTENANCE_END

else:

return MAINTENANCE_START <= current_hour < MAINTENANCE_END

if not is_in_maintenance_window():

schedule_for_maintenance(instance_id, vulnerability)The scheduler Lambda runs on EventBridge cron (0 2-6 * * ? *) during the 2-6 AM UTC maintenance window, processing queued patches without disrupting business operations. (SSM Automation also supports rate controls with up to ~500 concurrent executions if you go the native route.)

Network Security Architecture

Most examples run Lambda functions with default networking, creating security gaps. Production systems require proper network isolation. This caught me off guard initially.

# VPC isolation for both Lambda functions

resource "aws_lambda_function" "patch_deduplication" {

vpc_config {

subnet_ids = aws_subnet.lambda_subnet[*].id

security_group_ids = [aws_security_group.lambda_sg.id]

}

}

resource "aws_lambda_function" "patch_maintenance_scheduler" {

vpc_config {

subnet_ids = aws_subnet.lambda_subnet[*].id

security_group_ids = [aws_security_group.lambda_sg.id]

}

}

# VPC endpoints for secure AWS service communication

resource "aws_vpc_endpoint" "ssm" {

vpc_id = aws_vpc.patch_automation_vpc.id

service_name = "com.amazonaws.${var.aws_region}.ssm"

vpc_endpoint_type = "Interface"

subnet_ids = aws_subnet.lambda_subnet[*].id

}

resource "aws_vpc_endpoint" "dynamodb" {

vpc_id = aws_vpc.patch_automation_vpc.id

service_name = "com.amazonaws.${var.aws_region}.dynamodb"

vpc_endpoint_type = "Gateway"

}Lambda functions communicate with AWS services through VPC endpoints without internet access. NAT Gateway provides outbound connectivity only when needed.

The Real Scalability Challenges

Three limitations surface when moving from proof-of-concept to production scale.

DynamoDB Throttling Under Load

Conditional writes create contention when hundreds of vulnerability events arrive simultaneously. Multiple Lambda functions competing for locks on the same partition key causes throttling.

Implementation detail: The current architecture uses attribute_not_exists(instance_id) OR #status <> :in_progress conditions. Under load, this creates a bottleneck where only one vulnerability event per instance can acquire the lock.

Scaling limits: Testing shows failures starting around 50+ simultaneous vulnerability events for the same instance. The solution involves exponential backoff with jitter, but there’s still a theoretical limit at ~200 events/second per instance.

Massive environments need sharding across multiple DynamoDB tables or alternative coordination mechanisms like SQS FIFO queues. Not ideal, but it works.

Inspector vs SSM Data Mismatches

Inspector uses Amazon’s vulnerability database, updated constantly. SSM Patch Manager relies on OS vendor repositories with different update schedules.

Real-world example: Inspector2 detects CVE-2024-1234 on an Ubuntu instance. The automation triggers but SSM finds no applicable patches because Ubuntu hasn’t released the security update yet. The operation completes “successfully” but the vulnerability remains unpatched. Frustrating.

Frequency: In our testing environment, ~15-20% of Inspector findings initially lacked available patches (this may vary by organization), particularly for:

- Zero-day vulnerabilities where patches lag detection

- Distribution-specific packages with different naming conventions

- Backported security fixes that don’t match CVE identifiers exactly

You can mitigate mismatches using tailored patch baselines with auto-approval settings, maintenance windows to delay patching until vendors release fixes, and Security Hub workflows for manual review. Still no out-of-the-box CVE-to-patch resolver though.

EventBridge Throughput Limits

Inspector can generate thousands of findings during initial scans. EventBridge has default quotas of 18,750 invocations/sec and 10,000 PutEvents/sec in us-east-1 that cause throttling (delayed delivery, not silent loss) during these spikes.

You need quota increases, retry logic, and Dead Letter Queues for proper handling. The basic examples never encounter this because they test with single instances. Which is… unhelpful.

State Management

Traditional patching approaches are stateless. Each vulnerability event triggers independently with no coordination.

item = {

'instance_id': instance_id,

'status': 'IN_PROGRESS',

'started_at': datetime.now().isoformat(),

'vulnerabilities': [vulnerability],

'queued_vulnerabilities': [],

'expiration_time': int(time.time() + 7200), # 2 hour TTL

'lock_id': lock_id,

'request_id': request_id

}This tracks patch operations, prevents re-patching recently updated instances, queues additional vulnerabilities, and provides operational visibility. TTL ensures failed operations don’t block instances forever. (Two hour timeout seemed reasonable through testing.)

Instance Opt-In Control

Automated patching without consent can destroy critical systems. The solution requires explicit opt-in through tagging:

# Require explicit opt-in via tags - NO instances patched without consent

response = ec2.describe_instances(InstanceIds=[instance_id])

if not response['Reservations']:

logger.error(f"Instance {instance_id} not found")

return False

tags = response['Reservations'][0]['Instances'][0].get('Tags', [])

patch_enabled = any(tag['Key'] == 'AutoPatch' and tag['Value'].lower() == 'true' for tag in tags)

if not patch_enabled:

logger.info(f"Instance {instance_id} not opted in for auto-patching (missing AutoPatch=true tag)")

return FalseOnly instances tagged AutoPatch=true participate in automated patching. This prevents accidental patching of critical systems. Zero exceptions.

Post-Patch Validation

Patches aren’t successful just because they installed without errors. Services need to remain functional. Obviously.

# Post-patch service validation

for service in sshd apache2 nginx; do

if ! systemctl is-active --quiet $service; then

FAILED_SERVICES="$FAILED_SERVICES $service"

fi

done

if [ -n "$FAILED_SERVICES" ]; then

echo "ERROR: Critical services not running:$FAILED_SERVICES"

exit 1

fiThe automation validates that critical services restart correctly after patching. Failed validation triggers immediate alerts instead of waiting for monitoring systems to notice. Much faster.

Error Handling and Recovery

When things go wrong (and they do), recovery mechanisms are essential:

# Rollback assessment on failure

- name: RollbackAssessment

action: aws:executeScript

onFailure: step:NotifyFailure

Script: |

# Update state to failed and assess rollback options

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('PatchExecutionState')

table.update_item(

Key={'instance_id': events['InstanceId']},

UpdateExpression='SET #status = :failed',

ExpressionAttributeNames={'#status': 'status'},

ExpressionAttributeValues={':failed': 'FAILED'}

)Dead letter queues capture failed Lambda invocations. Detailed failure notifications provide actionable context. Automatic retry with exponential backoff handles transient failures. Standard stuff.

Monitoring and Observability

CloudWatch dashboards provide real-time visibility:

resource "aws_cloudwatch_dashboard" "patch_monitoring" {

dashboard_body = jsonencode({

widgets = [

{

properties = {

metrics = [

["AWS/Lambda", "Invocations", "FunctionName", aws_lambda_function.patch_deduplication.function_name],

[".", "Errors", ".", "."],

[".", "Duration", ".", "."]

]

title = "Lambda Function Metrics"

}

}

]

})

}Proactive alerting catches problems before they cascade. Success rates, failure reasons, and queue depths provide operational insight. Essential for debugging.

The Economics

Serverless architecture scales costs with usage. Quiet periods cost almost nothing. Major vulnerability events spike costs but remain predictable.

VPC endpoints eliminate NAT Gateway data transfer charges for AWS service calls. This saves hundreds of dollars monthly for organizations processing thousands of patch operations.

More importantly, automation provides consistency. Manual processes have human error, sick days, and vacation gaps. Automation doesn’t. Big win.

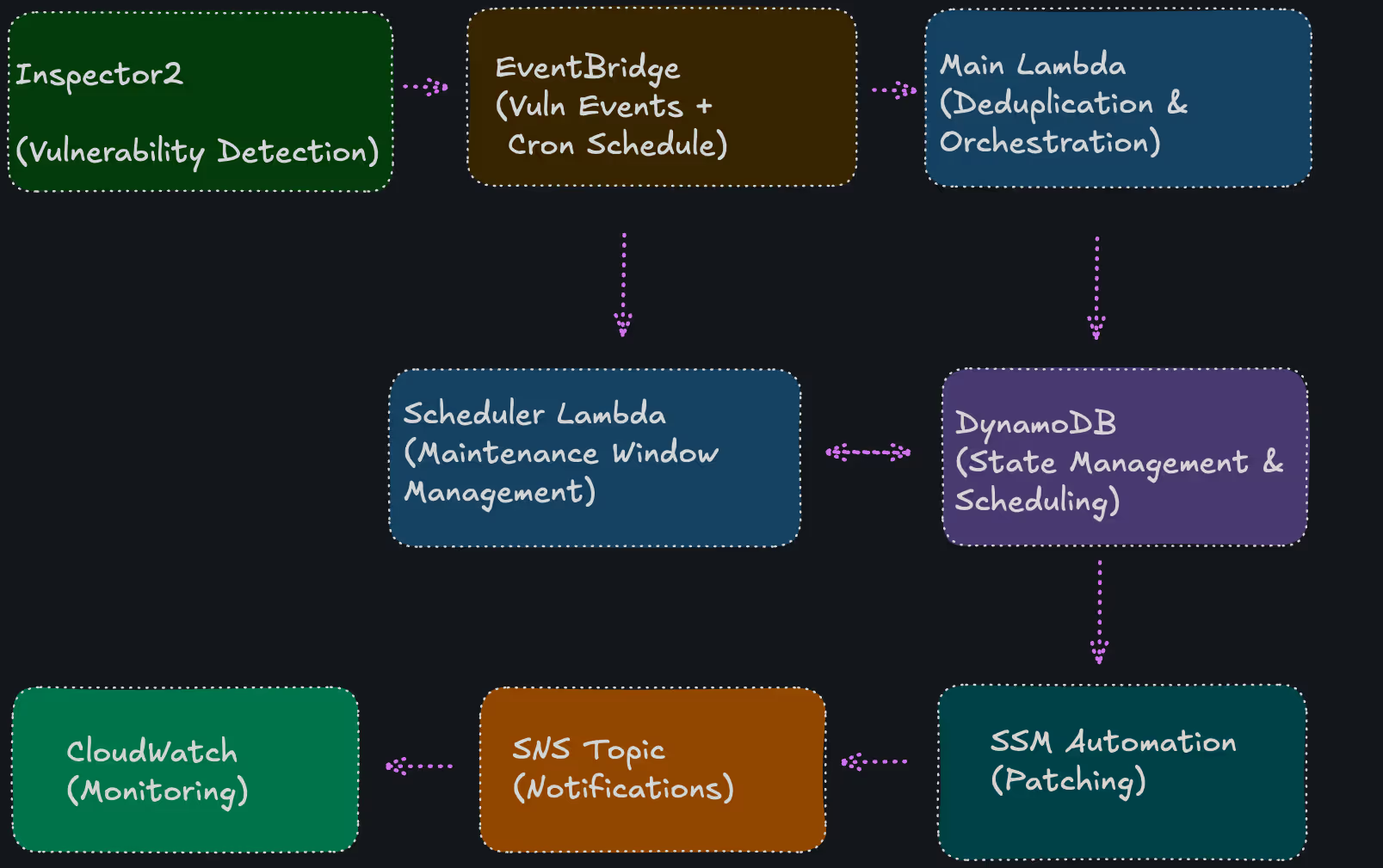

Architecture Layout

The blog post pattern is correct: Inspector → EventBridge → Systems Manager. But production requires comprehensive enhancements.

Flexible scheduling architecture works with custom Lambda for complex logic, or SSM Maintenance Windows for simpler cases. Deduplication prevents race conditions through DynamoDB conditional writes. Safety validation protects critical systems with pre-patch checks, while backup strategy enables recovery with automated EBS snapshots.

Maintenance windows respect business operations during 2-6 AM UTC. Network isolation follows security best practices with VPC endpoints. State management provides operational visibility with TTL cleanup. Explicit opt-in requires AutoPatch=true tag for safety.

Error handling includes DLQs, retry logic, and comprehensive logging. Quota awareness handles EventBridge throttling and proper scaling.

Building reliable infrastructure automation isn’t about getting the happy path working but also handling all the edge cases that could break production systems.

The key insight? Treat automation as a production system that requires the same engineering rigor as any other critical infrastructure component.

Security tools that break production systems create more risk than they mitigate. This architecture proves you can achieve both security objectives and operational reliability simultaneously. Which is the whole point.

Lessons Learned & Next Iteration

After sharing this with a couple of security engineers, several important improvements emerged that are worth implementing in future iterations.

Technical Corrections: EBS snapshots are asynchronous and can take hours depending on changed blocks, not “seconds” as stated. AWS-RunPatchBaseline reboots by default unless you specify RebootOption=NoReboot, which often leaves instances in pending-reboot states. Inspector rescans continuously, so deduplication should key off finding ARN + instance ID + maintenance window, not just timestamps.

Architecture Enhancements: An SQS FIFO queue between EventBridge and Lambda would provide better deduplication than conditional DynamoDB writes alone. Pre/post-hooks for load balancer drainage enable zero-downtime patching. Structured observability events for decision points (skipped, blocked, throttled) provide better operational insight than basic logs.

Security Hardening: IAM permissions need resource-level conditions for least privilege (e.g., tag-based ssm:SendCommand only on AutoPatch=true instances). Validation should verify configurations persist post-patch, not just service availability.

Scope Expansions: Container vulnerability “patching” means rebuilding images from patched base images, not OS-level patching. Windows environments need different SSM documents and approval workflows. ASG workloads might benefit more from AMI bake + Instance Refresh than in-place patching.

I believe these insights highlight the iterative nature of building production systems. The current architecture handles the core problem well, but there’s always room for improvement based on feedback and evolving requirements.

You can view the complete GitHub project from the link below - review it, contribute, comment, or use it as a starting point for your own implementation:

GitHub Repository: Autopatch-Vuln

The repo includes all Terraform configurations, Lambda functions, SSM automation documents, and deployment scripts discussed in this article.